How we managed to increase organic traffic by 347% in a year

The subject of this case study is a project run by a group of experts. Its purpose is to provide reliable, comprehensive, and helpful guides and reviews on home weather stations and related accessories available on the market. Their mission is to help readers choose the best weather tools for their individual needs. Their target audience isn’t limited to weather enthusiasts: they also help companies, farmers, and other people who use home weather stations.

The client’s business model is based on affiliate marketing. This means there is a collaboration between the seller (advertiser) and a partner, the affiliate (in this case, our client), who mediates the sale of a given product. The affiliate creates a new distribution channel for the seller’s products and, in return, receives a commission on each sale.

How did we come to work together?

The client asked us for help at the beginning of August 2018. After the first analyses, we noticed that the client’s specialization was relatively niche and that the content on the site was quite rich and exhaustive. However, this was not enough to give the website satisfactory visibility. And so we started by planning a strategy for the upcoming months. We showed it to our client who, without a shred of doubt, decided to entrust the SEO campaign to us.

The main goal we agreed on was a substantial increase in organic traffic to the client’s website.

Let the games begin: technical details

1. Redirecting

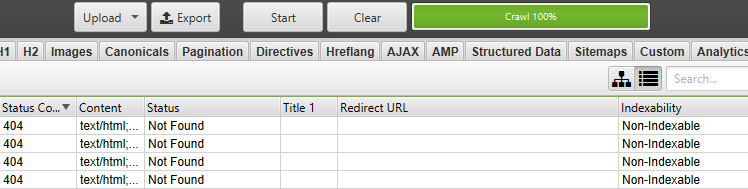

Navigation that relies on internal redirects is detrimental both to the user experience and to how efficiently search engine robots crawl the site’s structure. It slows down the indexing and evaluation of the affected subpages. We eliminated unnecessary redirects from the client’s website, which not only improved the site’s usability but also had a positive impact on how the crawl budget is used.

TIP

| For this type of analysis, use a crawler that behaves like a user: it checks each page of the site by following every link it finds. This lets you find the technical problems on the site that are related to redirects and error pages. Examples of such tools include Screaming Frog, Sitebulb, and DeepCrawl. For a basic analysis of a small website (up to 500 URLs), the free version of Screaming Frog is a good solution. |
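Once a crawler has exported its results, the redirected internal links can be listed with a few lines of code. The sketch below is only an illustration, not the tooling we used: it assumes a hypothetical export with a redirect map and a list of internal links, and all URLs in it are made up.

```python
from typing import Dict, List, Tuple


def resolve_redirect(url: str, redirects: Dict[str, str], max_hops: int = 10) -> str:
    """Follow a redirect map until reaching a URL that no longer redirects."""
    seen = {url}
    for _ in range(max_hops):
        if url not in redirects:
            return url
        url = redirects[url]
        if url in seen:
            raise ValueError(f"redirect loop detected at {url}")
        seen.add(url)
    raise ValueError("too many redirect hops")


def links_to_update(
    internal_links: List[Tuple[str, str]], redirects: Dict[str, str]
) -> List[Tuple[str, str, str]]:
    """Return (source page, old target, final target) for links that hit a redirect."""
    fixes = []
    for source, target in internal_links:
        final = resolve_redirect(target, redirects)
        if final != target:
            fixes.append((source, target, final))
    return fixes


# Hypothetical crawl export: which URLs redirect where, and which page links to what.
redirects = {
    "/old-guide": "/guides/old-guide",
    "/guides/old-guide": "/guides/weather-station-guide",
}
internal_links = [
    ("/", "/old-guide"),
    ("/", "/reviews/station-a"),
]

for source, old, new in links_to_update(internal_links, redirects):
    print(f"{source}: replace link to {old} with {new}")
```

Pointing each internal link straight at the final URL removes the intermediate hops for both users and crawlers.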

2. URL Structure

The existing URL design was modified to create a new, better structure for subpages and categories. At the start of the optimization process, the intermediate category pages contained the noindex tag, which resulted in an incomplete, and therefore unnatural, site structure tree in the Google index.

We therefore decided to create a subdirectory structure and allow the search engine to freely index these URLs. We prepared content that describes these categories appropriately. This optimization of subpages significantly increased their value for users and search engine robots alike.
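To check which pages still carry a noindex directive, the HTML of each page can be scanned for the robots meta tag. This is a minimal sketch using only the Python standard library; the class and function names are our own, and it only looks at the meta tag (a noindex can also be sent in the `X-Robots-Tag` HTTP header, which is not covered here).

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            for directive in attr_map.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page's robots meta tag contains a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical category page markup.
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))
```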

In the meantime, we suggested that the client create additional topical articles. With this type of website, it is important that the topics covered in the articles are actually helpful to potential readers and future buyers. A niche market creates powerful opportunities, but it also introduces limitations that should be taken into consideration. In this instance, there’s always a risk of covering a topic in terms that are too general (e.g. “weather stations”) across several articles, which is not far off from keyword cannibalization.

Bearing in mind all the factors mentioned above, we were well on track to create a website structure that is search engine-friendly and that enables the website to reach its maximum potential.

3. External linking / Backlinks

One of the factors hurting the visibility of our client’s website in the search results was toxic links pointing to the site. Backlinks are widely regarded as one of the most important ranking factors in the Google algorithm, so we paid special attention to this area. Links from spam comments and websites of dubious quality could lead to an algorithmic penalty.

We started by “renouncing” the low-quality links: we submitted a list of them in the Disavow Tool, which led to a noticeable increase in organic traffic in a relatively short time. In addition, our outreach team has been continuously obtaining valuable links from sites related to the client’s niche.
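The Disavow Tool accepts a plain text file with one entry per line: a full URL, or a whole domain prefixed with `domain:`, with `#` marking comment lines. A minimal sketch of building such a file is shown below; the function name and the example domains are hypothetical, not the client’s actual list.

```python
from typing import Iterable


def build_disavow_file(domains: Iterable[str], urls: Iterable[str], note: str = "") -> str:
    """Build the text of a disavow file: optional comment, domains, then single URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")
    # De-duplicate and sort so the file stays stable between revisions.
    for domain in sorted({d.strip().lower() for d in domains if d.strip()}):
        lines.append(f"domain:{domain}")
    for url in sorted({u.strip() for u in urls if u.strip()}):
        lines.append(url)
    return "\n".join(lines) + "\n"


# Hypothetical low-quality sources found during the backlink audit.
content = build_disavow_file(
    domains=["spam-comments.example", "Low-Quality-Directory.example"],
    urls=["http://forum.example/thread?id=123"],
    note="Low-quality links found in the backlink audit",
)
print(content)
```

Disavowing a whole domain is the broader option, so it is worth reserving it for sources where no link is worth keeping.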

4. Internal linking

We noticed that many articles, reviews and guides on the client’s website are closely related to each other, so we decided to make use of that in the campaign. We suggested that related subpages link to each other. This not only strengthens internal linking but also makes the site easier for visitors to navigate. A user reading an article on a given topic can now move directly from the text to a review of a product related to that topic.
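Finding related pages that do not link to each other yet can be partly automated. The sketch below assumes each page has been tagged with a set of topics (a hypothetical input, as are the URLs) and suggests links between pages that share at least one topic; a person should still review every suggestion.

```python
from itertools import combinations
from typing import Dict, List, Set, Tuple


def suggest_internal_links(
    topics: Dict[str, Set[str]], existing_links: Set[Tuple[str, str]]
) -> List[Tuple[str, str]]:
    """Suggest (from_page, to_page) pairs that share a topic but are not linked yet."""
    suggestions = []
    for page_a, page_b in combinations(sorted(topics), 2):
        if topics[page_a] & topics[page_b]:
            for link in ((page_a, page_b), (page_b, page_a)):
                if link not in existing_links:
                    suggestions.append(link)
    return suggestions


# Hypothetical pages, topic tags, and the links that already exist.
topics = {
    "/guides/rain-gauges": {"rain gauge"},
    "/reviews/gauge-x": {"rain gauge", "wireless"},
    "/guides/anemometers": {"wind"},
}
existing_links = {("/guides/rain-gauges", "/reviews/gauge-x")}

for source, target in suggest_internal_links(topics, existing_links):
    print(f"{source} could link to {target}")
```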

5. Internal duplicate content

Properly evaluating websites that contain duplicate content is a significant hurdle for Google. Duplicates tend to have a negative impact on how search engine robots evaluate a site.

Unfortunately, we detected duplicate content on various pages of the client’s website. Similar subpages within the same site competed against each other for the same keyword. With the “Panda” algorithm in mind, we had to deal with this problem in order to avoid a drop in rankings in organic search results. We combined some smaller articles, using their content to create a more extensive piece. This way, we created a rich source of knowledge and information gathered in one place for potential clients. At the same time, removing internal duplicates stopped the value of that content from being split across several subpages. Reducing the number of duplicates also helps Google’s robots understand the importance of each subpage while limiting keyword cannibalization.
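One common way to spot internal near-duplicates is to compare pages by the overlap of their word sequences. The sketch below uses Jaccard similarity on three-word shingles; it is an illustration rather than the method we used, and the threshold and sample texts are assumptions.

```python
import re
from itertools import combinations
from typing import Dict, List, Set, Tuple


def shingles(text: str, size: int = 3) -> Set[Tuple[str, ...]]:
    """Split text into overlapping word sequences of the given size."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: Set, b: Set) -> float:
    """Share of shingles the two sets have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicates(
    pages: Dict[str, str], threshold: float = 0.5
) -> List[Tuple[str, str, float]]:
    """Return page pairs whose similarity reaches the (assumed) threshold."""
    sets = {url: shingles(text) for url, text in pages.items()}
    results = []
    for url_a, url_b in combinations(sorted(sets), 2):
        score = jaccard(sets[url_a], sets[url_b])
        if score >= threshold:
            results.append((url_a, url_b, round(score, 2)))
    return results


# Hypothetical page texts.
pages = {
    "/a": "the best home weather station for farmers",
    "/b": "the best home weather station for farmers",
    "/c": "how to mount an anemometer on a roof",
}
print(near_duplicates(pages))
```

Pairs that score high are candidates for merging into one more extensive article.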

6. Improving content in terms of UX

Some of the articles on the site were presented in a way that made them difficult to read. The content itself was valuable, unique and interesting, but it was a single block of text, which made it difficult for readers to navigate and find what they were looking for.

We fixed this by dividing the text into sections and linking them from permanent navigation: a header or sidebar that follows the user as they scroll.

TIP

| In the case of WordPress, ensure an appropriate structure of Hx headers and use one of the plugins that generate a table of contents. This will allow you to easily systematize the post structure. This type of navigation can also be implemented manually using so-called “anchors” and assigning the appropriate id to the section headings: https://example.com/product-review <a href="https://example.com/product-review#name">Product name review</a> <h3 id="name">Product name</h3> |
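If no plugin is available, the anchors and the table of contents can be generated from the list of section titles. The following is a rough sketch with hypothetical function names; it uses `h3` headings to match the example in the tip.

```python
import html
import re
from typing import List, Tuple


def slugify(title: str) -> str:
    """Turn a heading into a lowercase, hyphen-separated id."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def build_toc(headings: List[str]) -> Tuple[str, List[str]]:
    """Return (table-of-contents HTML, heading HTML with matching ids)."""
    seen = {}
    toc_items, heading_tags = [], []
    for title in headings:
        base = slugify(title) or "section"
        seen[base] = seen.get(base, 0) + 1
        # Repeated titles get a numeric suffix so each anchor target differs.
        anchor = base if seen[base] == 1 else f"{base}-{seen[base]}"
        label = html.escape(title)
        toc_items.append(f'<li><a href="#{anchor}">{label}</a></li>')
        heading_tags.append(f'<h3 id="{anchor}">{label}</h3>')
    toc = "<ul>\n" + "\n".join(toc_items) + "\n</ul>"
    return toc, heading_tags


toc, tags = build_toc(["Product name", "Pros & cons", "Product name"])
print(toc)
print("\n".join(tags))
```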

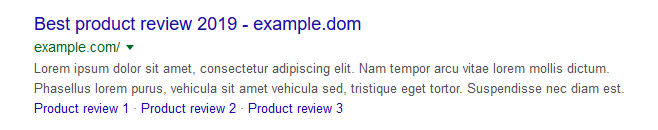

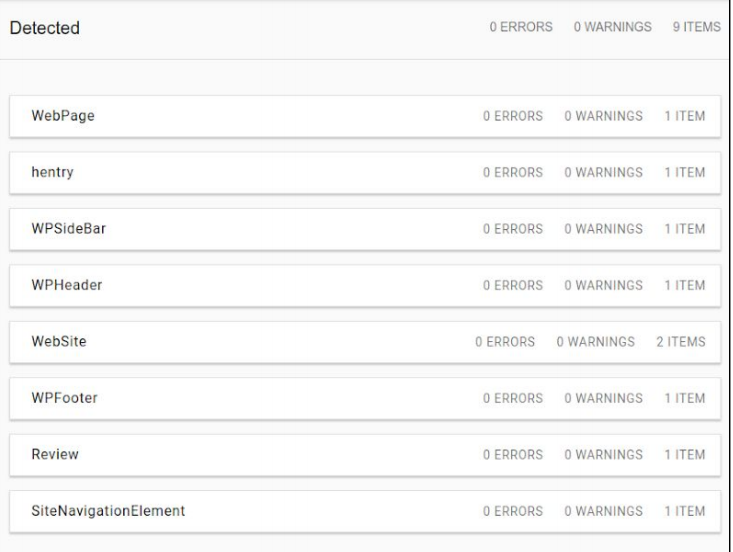

7. Expanded fragments and microdata

On the client’s website, we used structured data markup (schema.org), which allows the search engine to better understand the meaning of the content. The markup also gave the site greater visibility in search results thanks to extended fragments, also known as rich snippets. In addition to elements such as the title or page description, rich snippets may contain elements such as user ratings, photos, information about the author, etc. Microdata makes it considerably more likely that they are displayed, and they help the site stand out in organic search results, which can significantly improve the click-through rate (CTR) and, in combination with the general optimization of the site, reduce the bounce rate. That’s exactly what happened for our client.

The structured data is, in essence, a summary of what the user can expect from the content on a given page. However, keep in mind that you should never mark up incorrect information or data that is not covered by the content of your page. Such a violation may even result in a manual penalty issued by Google.

TIP

| You can check the validity of the microdata using a dedicated Google tool. Use this opportunity to eliminate all warnings, but especially make sure to eliminate every single error. https://search.google.com/structured-data/testing-tool/u/0/ |
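As an illustration of rating markup, the sketch below generates a schema.org `Product` with an `AggregateRating`. Note the assumptions: it emits JSON-LD, an alternative syntax to the microdata attributes described above, and the product name and rating values are invented. The values must match what is actually visible on the page.

```python
import json


def product_rating_jsonld(
    name: str, rating_value: float, review_count: int, best_rating: int = 5
) -> str:
    """Return a JSON-LD script tag describing a product and its aggregate rating."""
    if not 0 <= rating_value <= best_rating:
        raise ValueError("rating_value must be between 0 and best_rating")
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "bestRating": best_rating,
            "reviewCount": review_count,
        },
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical product and rating figures.
snippet = product_rating_jsonld("Example Weather Station", 4.6, 27)
print(snippet)
```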

Results – over 300% of the norm in 6 months

Once the changes had been thoroughly reindexed and re-evaluated by the search engine, our work yielded very good results.

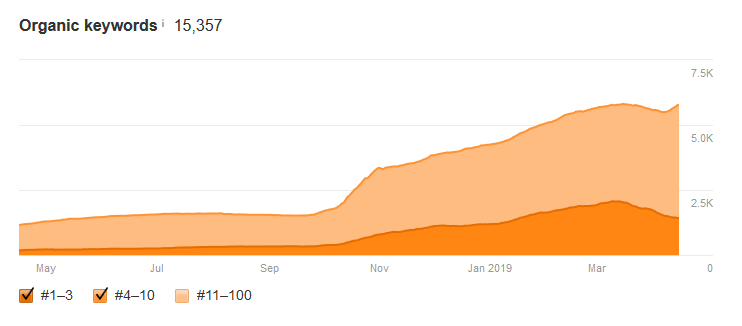

a) 3.8x as many phrases in the top 10

Over the course of our work, the number of organic keywords in the top 10, according to Ahrefs, increased from 1230 to 4663. That is about 3.8 times the starting figure, or an increase of roughly 279%.

That’s more than triple the initial organic traffic!

As a result of our team’s optimization work, monthly traffic on the site more than tripled after six months.

That is according to Ahrefs, of course, and we all know that its estimates tend to be very optimistic. What do Google Search Console and Google Analytics have to say about that?

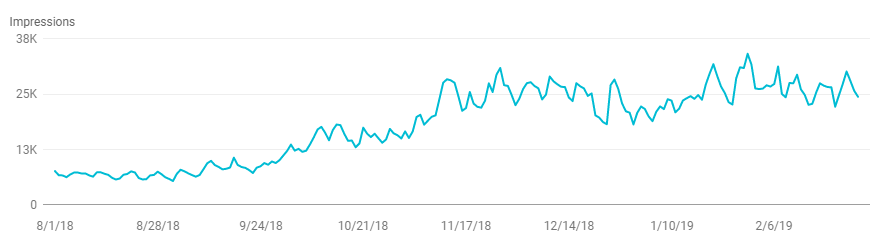

b) 3.9x the views according to GSC

Google Search Console makes it clear: there has been a noticeable increase in views. In February 2018, we had around 190k views; now that number is over 738k. That’s about 3.9 times as many, an increase of roughly 288%!

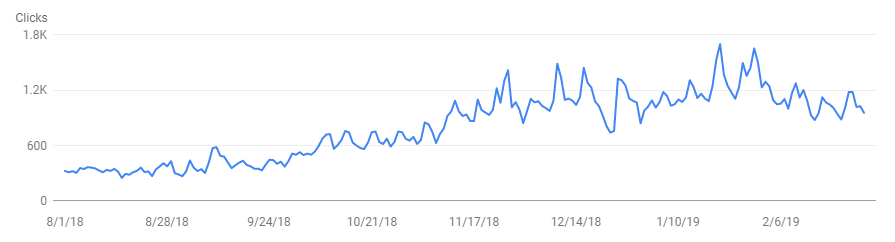

c) 3.2x the clicks according to Google Search Console

How are the clicks, then? Great, actually! When comparing February (9.26k clicks) to September (over 30k clicks), one thing is clear: clicks have grown to more than 3.2 times the February figure, an increase of around 224%!

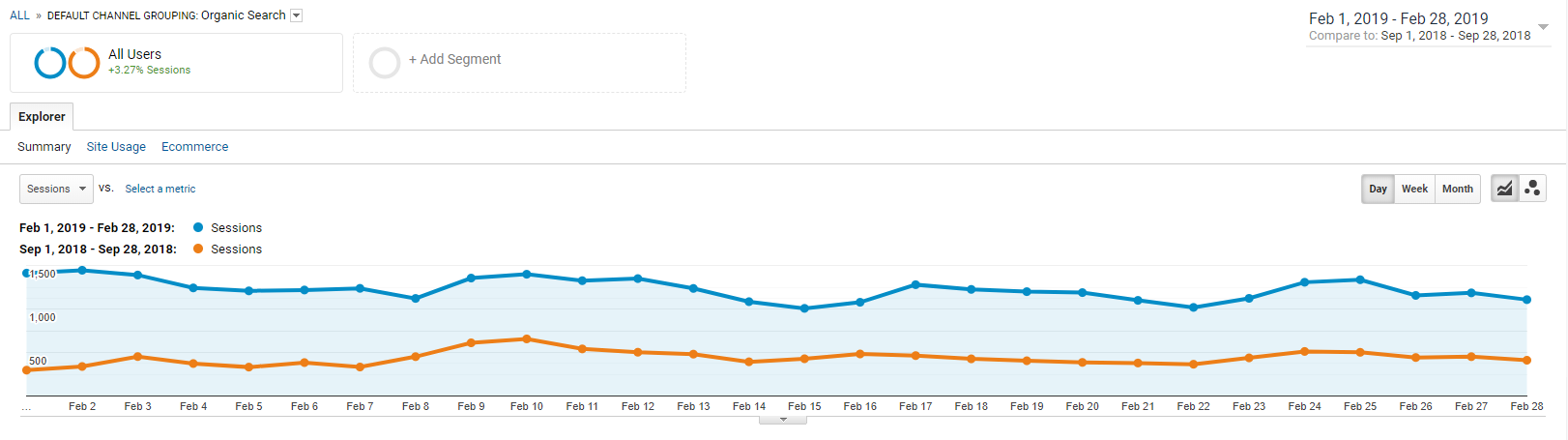

d) About 3.3x the organic traffic over the course of 6 months

Now it’s time to pay Google Analytics a visit and see how things are when it comes to organic traffic. We’ve compared two periods: August (9,896 entrances) and February (32,326 entrances). The numbers speak for themselves. To save you the mathematical gymnastics, however, we’re proud to say this:

We’ve more than tripled organic traffic in the span of 6 months, an increase of around 227%!
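A multiple of the starting value and a percentage increase are easy to mix up, so here is a small sketch for checking the figures quoted in this section. The GSC numbers are the rounded values from the text (“around 190k”, “over 738k”, “over 30k”), so the results for them are approximate.

```python
def growth(before: float, after: float) -> tuple:
    """Return (multiple of the starting value, percentage increase)."""
    if before <= 0:
        raise ValueError("before must be positive")
    multiple = after / before
    return round(multiple, 2), round((multiple - 1) * 100)


# Figures as quoted in the text; the GSC values are rounded approximations.
figures = {
    "top-10 keywords (Ahrefs)": (1230, 4663),
    "GSC views": (190_000, 738_000),
    "GSC clicks": (9_260, 30_000),
    "GA organic entrances": (9_896, 32_326),
}

for label, (before, after) in figures.items():
    multiple, increase = growth(before, after)
    print(f"{label}: {multiple}x the starting value, +{increase}%")
```

A value that is 3.3 times its starting point has grown by 230%, not 330%: the percentage increase is always the multiple minus one.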

Happy ending

So there it is: it can be done!

By working closely with the client, we’ve managed to get incredible results that have exceeded all expectations. After all, we’re talking about more than tripling all the relevant metrics over 6 months!

Either way, this is far from the only example of Neadoo working with a client yielding such results, so you can expect similar (if not more impressive!) case studies from us in the future.

Will there be more case studies?

Of course! After all, practice is the best of all instructors. For this reason, we will continue to create this type of content for you.

Are we happy with ourselves?

Yes, but we try not to rest on our laurels. We continue to work, believing that we’ll be able to deliver even better results in the near future. If you’d like us to give you an update on this case – let us know in the comments!

Are we bragging?

Yeah, probably… But admit it – we did a damn good job! And that’s not all – we can do similar things for you! Contact us and we’ll take a look at your website, analyze it, and let you know what results you can realistically expect!

Thank you for reading our case study. If you have any questions – don’t be afraid to ask in a comment or write to us at: hello@neadoo.london.

TL;DR:

- plan ahead and carefully think your website’s structure through: avoid keyword cannibalization and internal duplicates,

- block all low-quality subpages and make sure that Google indexes only the most valuable and helpful pages; be rigorous, as it’s better to have 20 subpages that are really valuable than 80 mediocre ones,

- make sure your articles are formatted properly: use the potential of microdata (AggregateRating) and, in the case of longer articles, make sure you have a table of contents and internal links to the sections.